H2O MLI Resources

- Values: { explainability }

- Explanation type: { local surrogate, global surrogate, partial dependence plot, ICE plot, shapley value, sensitivity analysis }

- Categories: { model-agnostic }

- Stage: { in-processing post-processing }

- Repository: https://github.com/h2oai/mli-resources

- Tasks: { classification }

- Input data: { tabular }

- Languages: { Python }

- References:

This repository by H2O.ai contains useful resources and notebooks that showcase well-known machine learning interpretability techniques.

The examples use the `h2o` Python package and H2O's own estimators (e.g. its fork of XGBoost), but all code is open source and the examples remain illustrative of the interpretability techniques.

These case studies also deal with practical coding issues and preprocessing steps, e.g. the fact that LIME can be unstable when input variables are strongly correlated.

In particular, there are helpful examples for:

- Global Surrogate Models (example with Decision Tree Surrogate) [notebook]

- XGBoost with monotonic constraints [notebook]

- Local Interpretable Model Agnostic Explanations (LIME) [notebook]

- cf. LIME

- Local Feature Importance and Reason Codes using LOCO [notebook]

- Partial Dependence and ICE Plots [notebook]

- Sensitivity Analysis [notebook]
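To give a feel for what one of these techniques computes, here is a minimal sketch of ICE curves and partial dependence in plain Python. It is not taken from the repository's notebooks and does not use the `h2o` package: the `predict` function is a hypothetical stand-in for any fitted black-box model, and the toy rows, feature names and grid values are made up for illustration.

```python
from statistics import mean


def predict(row):
    """Hypothetical black-box model (an assumption, not an H2O estimator)."""
    age, income = row
    high_income = 1.0 if income > 50 else 0.0
    # The age effect is steeper for high-income rows (an interaction).
    return 0.02 * age + 0.5 * high_income + 0.01 * age * high_income


def ice_curves(predict_fn, rows, feature_idx, grid):
    """Return one ICE curve per row.

    Each curve holds the model's predictions for that row as the chosen
    feature is swept over the grid while all other features stay fixed.
    """
    curves = []
    for row in rows:
        curve = []
        for value in grid:
            modified = list(row)
            modified[feature_idx] = value
            curve.append(predict_fn(modified))
        curves.append(curve)
    return curves


def partial_dependence(curves):
    """Partial dependence is the pointwise average of the ICE curves."""
    return [mean(points) for points in zip(*curves)]


# Toy data: (age, income) pairs, invented for this sketch.
rows = [(25, 30), (40, 80), (60, 55), (35, 20)]
grid = [20, 40, 60]  # values of `age` to sweep over

ice = ice_curves(predict, rows, feature_idx=0, grid=grid)
pdp = partial_dependence(ice)

for row, curve in zip(rows, ice):
    print(row, [round(p, 2) for p in curve])
print("PDP:", [round(p, 2) for p in pdp])
```

In this toy setup the ICE curves for high-income rows rise faster than those for low-income rows. The averaged partial dependence curve hides that interaction, which is why the two plots are usually read together.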

The repository provides no license, only a request to cite the original paper authors and the H2O.ai machine learning interpretability team where appropriate.

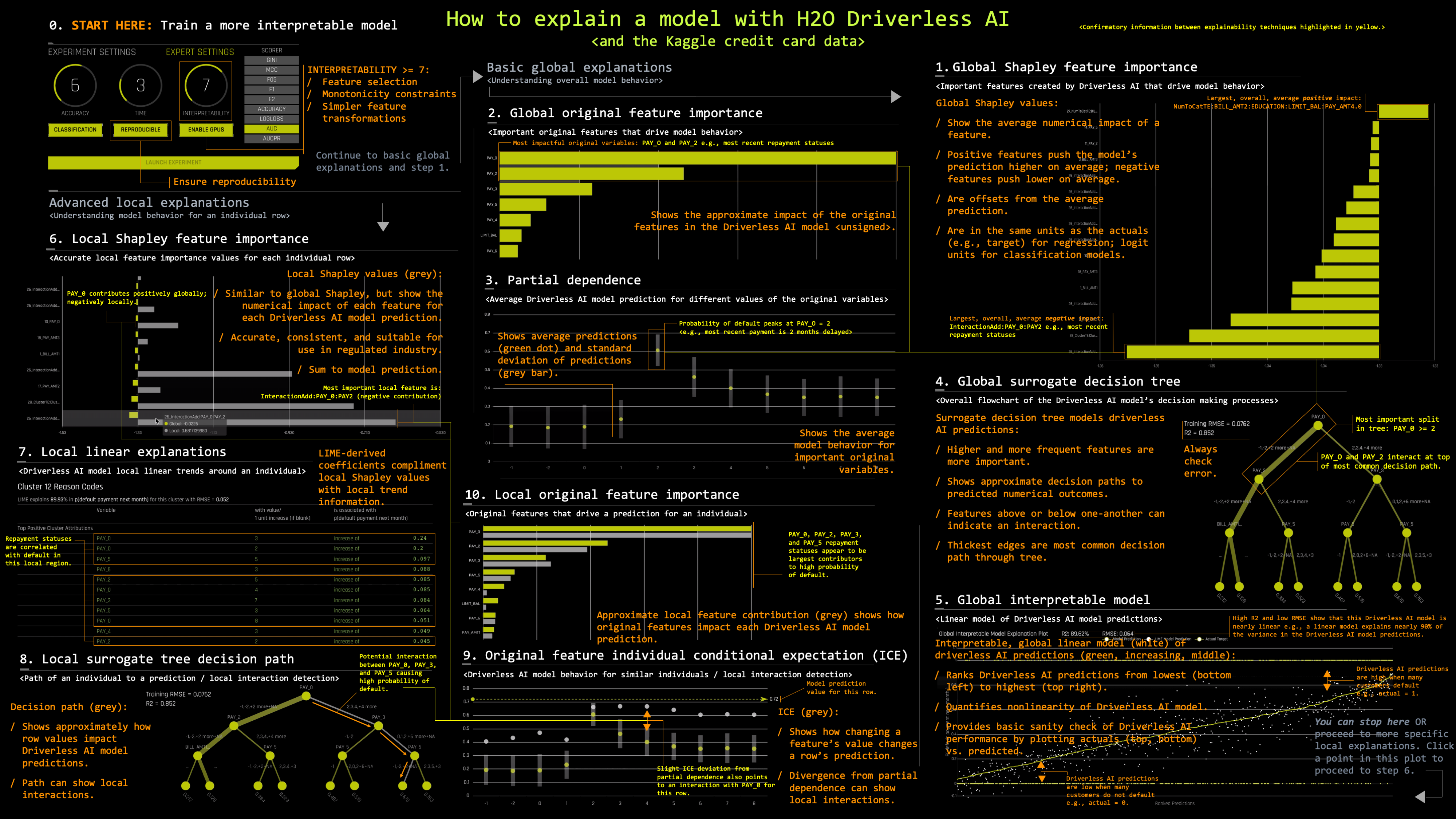

The repository also contains the following interpretability cheatsheet: