ART: Adversarial Robustness 360 Toolbox

- Values: { security }

- Categories: { model-specific model-agnostic }

- Stage: { preprocessing in-processing post-processing }

- Repository: https://github.com/Trusted-AI/adversarial-robustness-toolbox

- Tasks: { classification object detection speech recognition generation certification }

- Input data: { tabular text image audio video }

- Licence: MIT

- Languages: { Python }

- References:

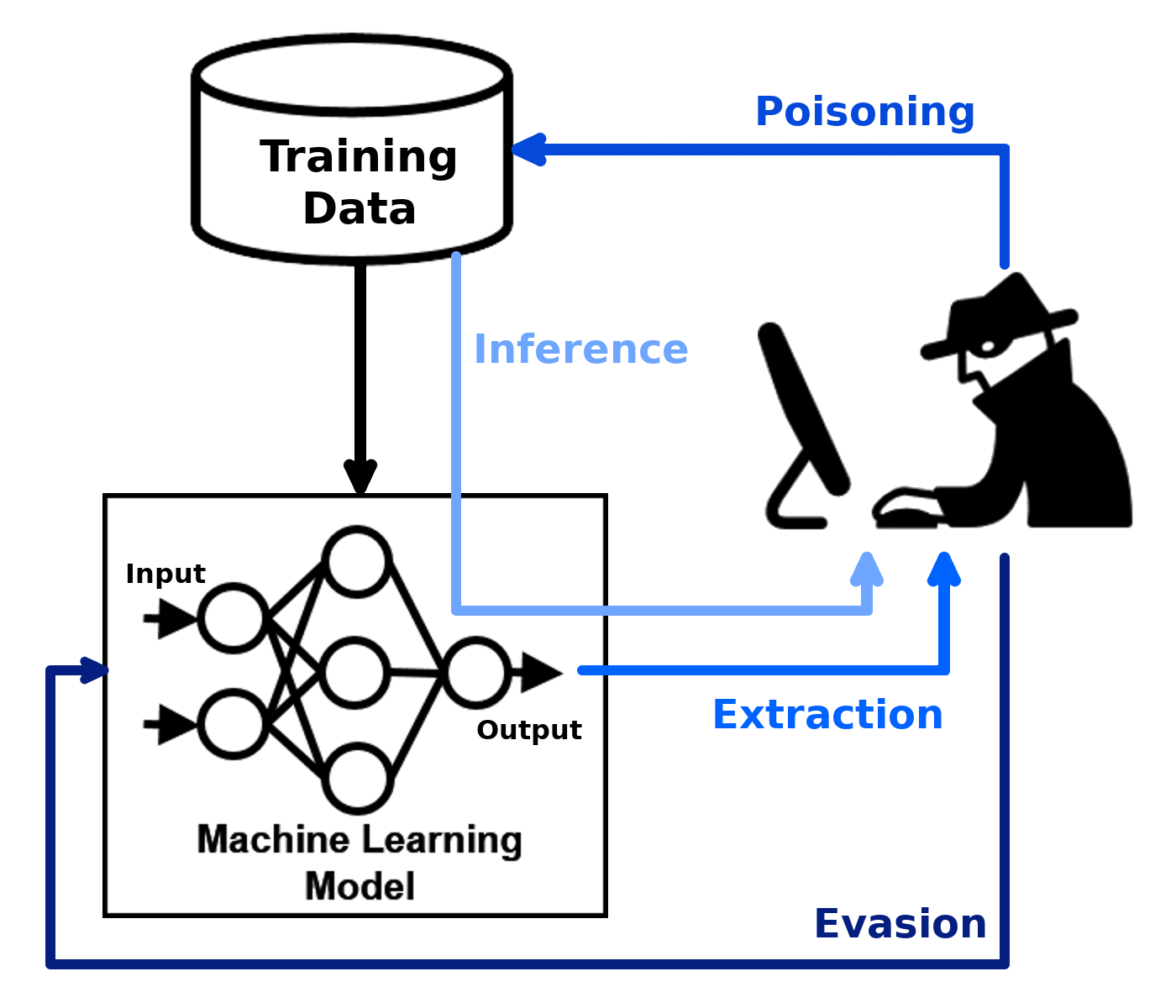

The Adversarial Robustness Toolbox (ART) is a comprehensive toolbox that unifies many attacks and defences for four categories of adversarial threats to machine learning models: evasion, poisoning, extraction and inference (for example, inferring sensitive attributes of the training data, or determining whether an example was part of the training data). ART supports popular machine learning frameworks such as TensorFlow, Keras, PyTorch and scikit-learn, a broad range of data types (tabular, text, image, audio, video) and many machine learning tasks (classification, object detection, speech recognition, generation, certification).
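To make the evasion category concrete, the following is a minimal sketch of a one-step fast gradient sign perturbation against a hand-written logistic regression model. It deliberately uses only the Python standard library and does not call ART; the model weights, the input and the budget `eps` are hypothetical values chosen for illustration. ART offers comparable attacks as ready-made classes (for example `FastGradientMethod` in `art.attacks.evasion`), which should be preferred in practice.

```python
import math


def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def sign(g):
    """Return -1, 0 or 1 depending on the sign of g."""
    return (g > 0) - (g < 0)


def fgsm(weights, bias, x, y, eps):
    """Perturb x by eps in the direction that increases the loss.

    For logistic regression with cross-entropy loss, the gradient of the
    loss with respect to the input is (p - y) * weights.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - y) * w for w in weights]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


# Hypothetical toy model and input, chosen only for illustration.
weights, bias = [2.0, -1.0], 0.0
x, y = [0.3, 0.2], 1

x_adv = fgsm(weights, bias, x, y, eps=0.2)
clean_label = int(predict_proba(weights, bias, x) >= 0.5)
adv_label = int(predict_proba(weights, bias, x_adv) >= 0.5)
print(clean_label, adv_label)
```

In this toy setting the perturbation stays within an L-infinity distance of `eps` from the original input, yet it moves the input across the decision boundary, which is exactly what an evasion attack aims for.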

ART is well documented: the GitHub repository hosts a wiki with an extensive explanation of the different categories of attacks, including paper references for many concrete attacks. Many of these attacks are also implemented in ART. An overview of defences is provided as well. Some attacks assume white-box access to the model, whereas others assume only black-box access. The toolbox covers both model-specific and model-agnostic techniques.

Additionally, ART provides tools (such as metrics) to evaluate the robustness of machine learning methods and applications.
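As an illustration of what such a robustness metric can measure, the sketch below computes robust accuracy for a linear classifier: the fraction of samples that remain correctly classified under every perturbation within an L-infinity budget `eps`. This is not ART's implementation (ART ships its own metrics in `art.metrics`); the function name `robust_accuracy`, the model and the data are assumptions made for this example. For a linear model the worst-case perturbation shifts the score by at most `eps` times the L1 norm of the weights, so the metric can be computed exactly.

```python
def robust_accuracy(weights, bias, samples, labels, eps):
    """Fraction of samples whose correct prediction survives any
    L-infinity perturbation of size at most eps (linear model only)."""
    l1_norm = sum(abs(w) for w in weights)
    robust = 0
    for x, y in zip(samples, labels):
        score = sum(w * xi for w, xi in zip(weights, x)) + bias
        # Signed margin: positive when the prediction matches label y.
        margin = score if y == 1 else -score
        # The adversary can reduce the margin by at most eps * ||w||_1.
        if margin > eps * l1_norm:
            robust += 1
    return robust / len(samples)


# Hypothetical toy model and data, chosen only for illustration.
weights, bias = [2.0, -1.0], 0.0
samples = [[0.3, 0.2], [1.0, 0.0], [-0.5, 0.5], [0.1, 0.3]]
labels = [1, 1, 0, 0]

for eps in (0.0, 0.1, 0.2):
    print(eps, robust_accuracy(weights, bias, samples, labels, eps))
```

With `eps=0.0` the value equals ordinary accuracy; as the budget grows, samples close to the decision boundary stop counting as robust and the metric decreases.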

Taken together, ART provides offensive and defensive techniques for each stage of the machine learning pipeline.
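As one example of a defence at the preprocessing stage, the sketch below reduces the bit depth of input features before they reach the model, an idea known as feature squeezing. It is a standard-library illustration rather than ART code (ART provides a `FeatureSqueezing` preprocessor in `art.defences.preprocessor`); the function name `squeeze_bit_depth` and the sample values are hypothetical, and inputs are assumed to lie in the range [0, 1].

```python
def squeeze_bit_depth(x, bit_depth):
    """Quantise each feature in [0, 1] to 2**bit_depth evenly spaced levels."""
    levels = 2 ** bit_depth - 1
    return [round(v * levels) / levels for v in x]


# Hypothetical clean input and a slightly perturbed copy of it.
x_clean = [0.30, 0.70]
x_perturbed = [0.33, 0.68]

squeezed_clean = squeeze_bit_depth(x_clean, bit_depth=2)
squeezed_perturbed = squeeze_bit_depth(x_perturbed, bit_depth=2)
print(squeezed_clean == squeezed_perturbed)
```

Small perturbations that stay within one quantisation step are mapped to the same squeezed input, so the model sees identical features for both versions. Larger perturbations are not removed, which is why such a defence is usually combined with others.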