Aequitas: Bias and Fairness Audit Toolkit

- Values: { fairness }

- Fairness type: { group fairness }

- Categories: { model-agnostic }

- Stage: { preprocessing post-processing }

- Repository: https://github.com/dssg/aequitas

- Tasks: { classification }

- Input data: { tabular }

- Licence: MIT

- Languages: { Python }

- References:

Audit

The Aequitas toolkit can be used from the command line, programmatically via its Python API, or via a web interface. The web interface offers a four-step process for auditing a dataset for bias. The four steps are:

- Upload (tabular) data

- Determine protected groups and reference group

- Select fairness metrics and disparity intolerance

- Inspect bias report

This toolkit is useful for auditing bias and fairness according to a limited set of common fairness metrics, but does not offer algorithms for mitigating bias. It thus only requires input data and model outputs and does not intervene in the training of the model itself.
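Because the audit only needs model outputs alongside the data, the input can be thought of as one table with a prediction column, a ground-truth column, and one column per protected attribute. The sketch below illustrates that shape using only the Python standard library. The column names `score` and `label_value` are an assumption based on our understanding of the Aequitas documentation and should be checked against the repository; `load_audit_table` and the sample values are hypothetical and are not part of Aequitas.

```python
import csv
import io

# Assumed column names for the prediction and the ground truth;
# verify them against the Aequitas documentation before relying on them.
REQUIRED = ("score", "label_value")


def load_audit_table(text):
    """Parse CSV text into rows plus the list of attribute columns.

    Hypothetical helper for illustration only, not an Aequitas function.
    """
    reader = csv.DictReader(io.StringIO(text))
    fieldnames = reader.fieldnames or []
    missing = [c for c in REQUIRED if c not in fieldnames]
    if missing:
        raise ValueError(f"missing required columns: {missing}")
    rows = []
    for raw in reader:
        row = dict(raw)
        # Binary prediction and binary ground truth, stored as integers.
        row["score"] = int(raw["score"])
        row["label_value"] = int(raw["label_value"])
        rows.append(row)
    # Every remaining column is treated as a protected attribute.
    attributes = [c for c in fieldnames if c not in REQUIRED]
    return rows, attributes


# Made-up sample data, for illustration only.
SAMPLE = """score,label_value,race,sex
1,1,white,female
0,1,black,male
1,0,black,female
"""

rows, attributes = load_audit_table(SAMPLE)
print(attributes)
```

No features or model internals appear in the table, which is what makes the audit model-agnostic.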

Fairness metrics

The number of supported fairness metrics is relatively limited compared to other fairness toolkits. They concern representativeness and error rates per group, in this case relative to a reference group.
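For orientation, the per-group error rates can be written using the standard confusion-matrix definitions, where $TP_g$, $FP_g$, $FN_g$ and $TN_g$ are the counts within group $g$. Expressing "relative to a reference group" as a ratio is an assumption about how the comparison is made; the symbols are ours rather than the toolkit's.

```latex
\begin{align*}
\mathrm{FPR}_g &= \frac{FP_g}{FP_g + TN_g}, &
\mathrm{FDR}_g &= \frac{FP_g}{FP_g + TP_g}, \\
\mathrm{FNR}_g &= \frac{FN_g}{FN_g + TP_g}, &
\mathrm{FOR}_g &= \frac{FN_g}{FN_g + TN_g}, \\
\mathrm{disparity}_g(m) &= \frac{m_g}{m_{\mathrm{ref}}}
\end{align*}
```

A disparity of 1 means that group $g$ has the same value of metric $m$ as the reference group.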

The following disparities are computed in the web interface:

- Equal Parity

- Proportional Parity

- False Positive Rate Parity

- False Discovery Rate Parity

- False Negative Rate Parity

- False Omission Rate Parity
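To make the list above concrete, here is a minimal pure-Python sketch of how such disparities could be computed from binary predictions and labels. It is not the Aequitas API. It makes the following assumptions: Equal Parity compares each group's share of all predicted positives (`ppr`), Proportional Parity compares the predicted-positive fraction within each group (`pprev`), and a group passes when its disparity lies between `tau` and `1 / tau`. The function names, the threshold `tau = 0.8` and the example counts are hypothetical.

```python
from collections import Counter

METRICS = ("ppr", "pprev", "fpr", "fdr", "fnr", "for")


def _ratio(numerator, denominator):
    """Divide, returning None if the result is undefined."""
    if numerator is None or not denominator:
        return None
    return numerator / denominator


def group_metrics(rows, attribute):
    """Compute confusion-matrix rates for each value of `attribute`."""
    counts = {}
    for row in rows:
        # Build the cell name: "tp", "fp", "tn" or "fn".
        cell = ("t" if row["score"] == row["label_value"] else "f") + (
            "p" if row["score"] == 1 else "n"
        )
        counts.setdefault(row[attribute], Counter())[cell] += 1
    total_pp = sum(c["tp"] + c["fp"] for c in counts.values())
    result = {}
    for group, c in counts.items():
        pred_pos = c["tp"] + c["fp"]
        pred_neg = c["tn"] + c["fn"]
        result[group] = {
            "ppr": _ratio(pred_pos, total_pp),               # share of all positives
            "pprev": _ratio(pred_pos, pred_pos + pred_neg),  # positives within group
            "fpr": _ratio(c["fp"], c["fp"] + c["tn"]),
            "fdr": _ratio(c["fp"], pred_pos),
            "fnr": _ratio(c["fn"], c["fn"] + c["tp"]),
            "for": _ratio(c["fn"], pred_neg),
        }
    return result


def disparities(metrics, reference):
    """Divide every group's metrics by those of the reference group."""
    ref = metrics[reference]
    return {
        group: {m: _ratio(values[m], ref[m]) for m in METRICS}
        for group, values in metrics.items()
    }


def passes(disparity, tau=0.8):
    """Assumed tolerance rule: tau <= disparity <= 1 / tau."""
    return disparity is not None and tau <= disparity <= 1 / tau


def make_rows(group, tp, fp, fn, tn):
    """Build made-up rows with the given confusion-matrix counts."""
    cells = [(1, 1)] * tp + [(1, 0)] * fp + [(0, 1)] * fn + [(0, 0)] * tn
    return [{"group": group, "score": s, "label_value": y} for s, y in cells]


rows = make_rows("a", tp=2, fp=1, fn=1, tn=4) + make_rows("b", tp=1, fp=2, fn=1, tn=4)
metrics = group_metrics(rows, "group")
disp = disparities(metrics, reference="a")
verdict = {m: passes(d) for m, d in disp["b"].items()}
print(verdict)
```

In this made-up example both groups receive the same number of positive predictions, so the two representation-based parities hold, while group `b` has a higher false positive rate than the reference group `a` and fails that check. A group can therefore satisfy some parities and violate others, which is why the choice of metric matters.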

With the Python API you can compute a few closely interrelated disparities.

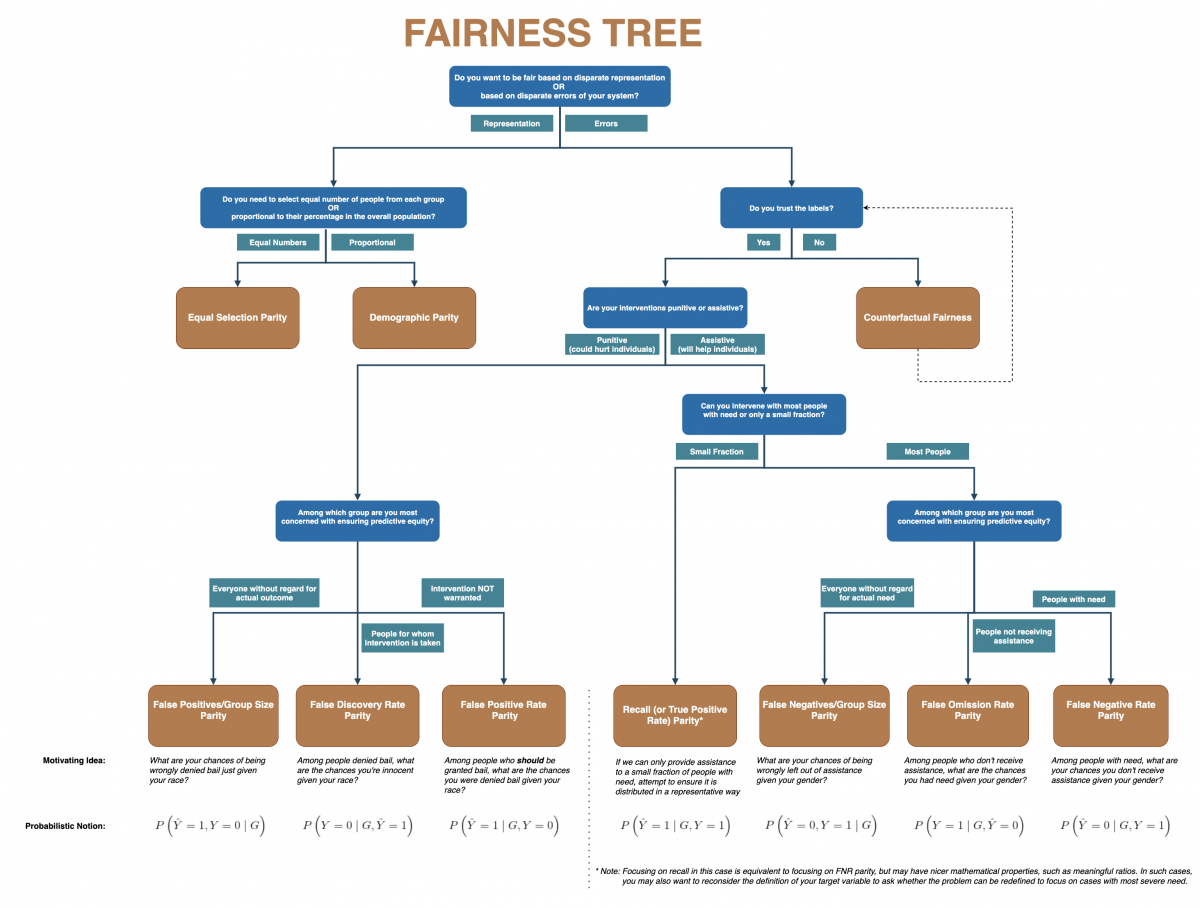

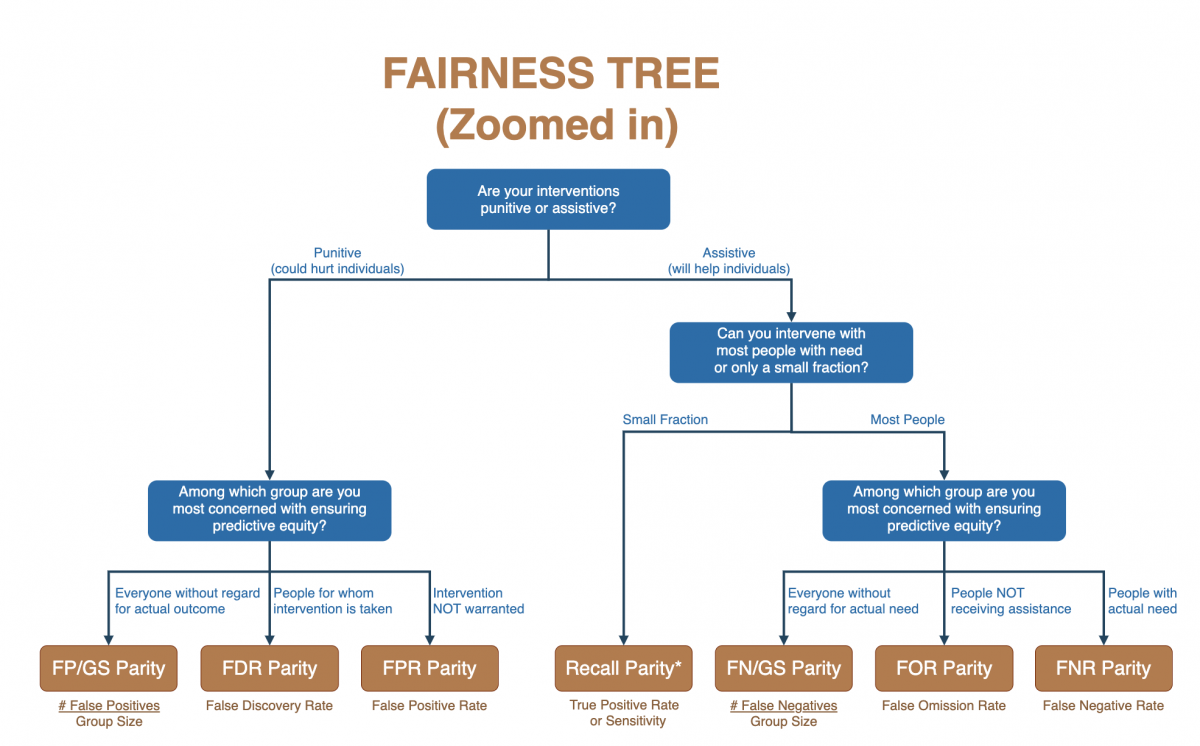

Fairness tree

A particularly useful feature of this toolkit is the fairness tree it provides, which can also be helpful in combination with other toolkits.